Redis Stories & Architectural Debates

Table of contents

- Can Redis be used as a primary database?

- What is Redis (Remote Dictionary Server)

- What Qualifies a Primary Database?

- Does Redis Check the boxes?

- Redis as a Primary Database - Success Story

- Redis features beyond caching and towards everything!

- Redis Modules

- Why use Redis as a primary database?

- Cost Concerns

- References

Can Redis be used as a primary database?

Let's debate!

What is Redis (Remote Dictionary Server)

Redis is an open-source, in-memory data store used as a distributed key–value database, cache, and message broker, with optional durability.

Redis popularized the idea of a system that can be considered a datastore and a cache at the same time. It was designed so that data is always read from and modified in the main computer memory.

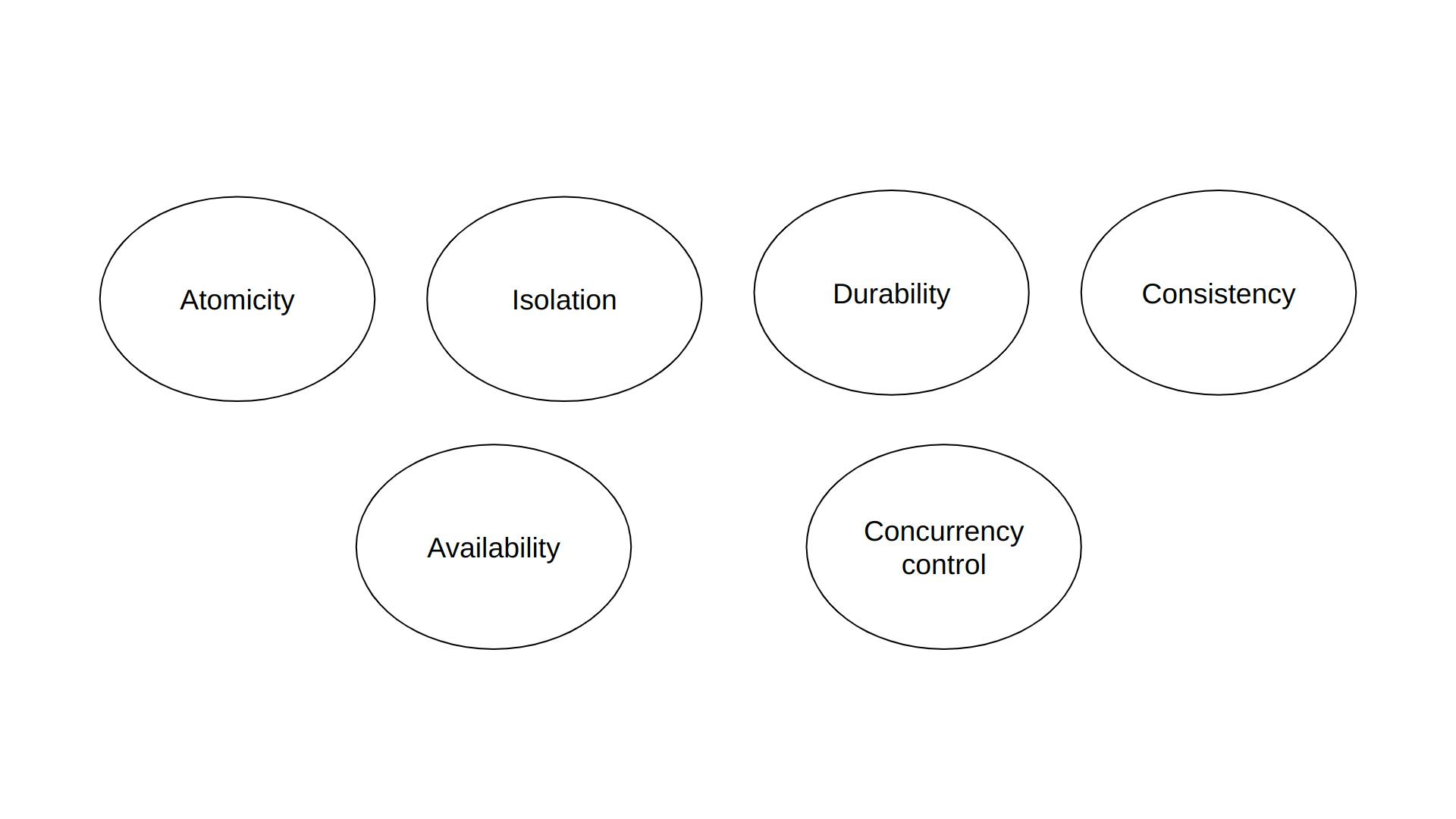

What Qualifies a Primary Database?

You'll probably weigh other preferences too, but the properties below are the deal breakers! Who wants a database without durability?

Atomicity

Either all statements in a transaction succeed, or none of them take effect.

Isolation

How isolated a given transaction is from changes made by other concurrent transactions.

Durability

Writes committed by a transaction must persist on a storage medium, even in case of a failure.

Consistency

Data is consistent if it appears the same on all corresponding nodes at the same time, regardless of which user is accessing it and from where.

Availability

The degree to which a read or write request receives a successful response.

Concurrency Control

What happens when a transaction tries to change a value that has been modified since the transaction started: do you block the change, or allow it and fail later?

Does Redis Check the boxes?

Atomicity ✅

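Redis is atomic: you can queue multiple commands (MULTI) and then commit them all at once (EXEC). Note that Redis does not roll back: if one queued command fails at runtime, the others still run.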
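To make the MULTI/EXEC behaviour concrete, here is a minimal Python sketch. It does not talk to a real Redis server: `ToyRedis` and `Transaction` are hypothetical stand-ins that queue commands the way MULTI does and run them back to back on `exec()`, including Redis' no-rollback behaviour, where a command that fails at runtime does not undo the others.

```python
from typing import Any, Callable, List


class ToyRedis:
    """Hypothetical single-process stand-in for a Redis server (not a real client)."""

    def __init__(self) -> None:
        self.data: dict = {}

    def set(self, key: str, value: Any) -> str:
        self.data[key] = value
        return "OK"

    def incr(self, key: str) -> int:
        value = self.data.get(key, 0)
        if not isinstance(value, int):
            # Redis also rejects INCR on a value that is not an integer.
            raise TypeError("value is not an integer")
        self.data[key] = value + 1
        return self.data[key]


class Transaction:
    """Queues commands (like MULTI) and runs them back to back on exec() (like EXEC)."""

    def __init__(self, server: ToyRedis) -> None:
        self.server = server
        self.queued: List[Callable[[], Any]] = []

    def queue(self, command: str, *args: Any) -> "Transaction":
        method = getattr(self.server, command)
        self.queued.append(lambda: method(*args))  # nothing runs yet
        return self

    def exec(self) -> List[Any]:
        # The whole batch runs in one step. A runtime failure does NOT roll
        # back the other commands: its error goes into the reply list and
        # execution continues with the next queued command.
        replies: List[Any] = []
        for command in self.queued:
            try:
                replies.append(command())
            except TypeError as exc:
                replies.append(exc)
        self.queued.clear()
        return replies


server = ToyRedis()
replies = (
    Transaction(server)
    .queue("set", "name", "redis")
    .queue("incr", "name")      # fails: "redis" is not an integer
    .queue("incr", "counter")   # still runs
    .exec()
)
print(replies)      # ['OK', TypeError('value is not an integer'), 1]
print(server.data)  # {'name': 'redis', 'counter': 1}
```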

Isolation ✅

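Redis provides serializable isolation: it executes commands one at a time, so two commands sent at the same time run one exactly after the other, never interleaved. A MULTI/EXEC transaction also runs as one uninterrupted unit.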
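The serial execution model can be sketched in Python as well. This is a hypothetical toy, not Redis internals: one worker thread drains a single command queue, so two client threads that each send 1000 increments never interleave their read-modify-write steps, and no update is lost.

```python
import queue
import threading


class SingleThreadedServer:
    """Toy model (not Redis itself): every client's command goes through one
    queue and is executed by one worker thread, so commands never interleave."""

    def __init__(self) -> None:
        self.data: dict = {}
        self.commands: queue.Queue = queue.Queue()
        self.worker = threading.Thread(target=self._run, daemon=True)
        self.worker.start()

    def _run(self) -> None:
        while True:
            item = self.commands.get()
            if item is None:  # shutdown signal
                return
            key, reply = item
            # INCR as a read-modify-write: safe because nothing else runs in between.
            self.data[key] = self.data.get(key, 0) + 1
            reply.put(self.data[key])

    def incr(self, key: str) -> int:
        reply: queue.Queue = queue.Queue(maxsize=1)  # per-command reply channel
        self.commands.put((key, reply))
        return reply.get()  # block until the worker has executed the command

    def shutdown(self) -> None:
        self.commands.put(None)
        self.worker.join()


def client(server: SingleThreadedServer, times: int) -> None:
    for _ in range(times):
        server.incr("visits")


server = SingleThreadedServer()
clients = [threading.Thread(target=client, args=(server, 1000)) for _ in range(2)]
for t in clients:
    t.start()
for t in clients:
    t.join()
server.shutdown()
print(server.data["visits"])  # 2000: no increment was lost
```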

Durability ✅

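Snapshots (RDB): Redis can write a point-in-time snapshot of the whole dataset to disk on a configurable schedule. Snapshots are taken asynchronously, so they are fast, but writes made after the last snapshot can be lost on failure.

AOF (Append Only File): every write command is appended to the AOF, and Redis replays the file on restart to rebuild the data.

High durability

Slower, and how much it guarantees depends on the fsync policy: with appendfsync set to always, every write is synced to disk before it is acknowledged, while the default, everysec, can lose about one second of writes on a crash.

Configurable! You can combine both methods.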
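A minimal sketch of the append-only-file idea, assuming a made-up JSON-lines log format (Redis' real AOF stores commands in its RESP protocol format). `AofStore` is hypothetical: it appends and fsyncs every write before applying it, roughly what appendfsync always does, then rebuilds its state by replaying the log after a simulated restart.

```python
import json
import os
import tempfile


class AofStore:
    """Toy key-value store with an append-only log (not Redis' real AOF format)."""

    def __init__(self, path: str) -> None:
        self.path = path
        self.data: dict = {}
        self._replay()  # rebuild in-memory state from the log, if any
        self.log = open(path, "a", encoding="utf-8")

    def _replay(self) -> None:
        if not os.path.exists(self.path):
            return
        with open(self.path, encoding="utf-8") as f:
            for line in f:
                self._apply(json.loads(line))

    def _apply(self, entry: list) -> None:
        op, key, *rest = entry
        if op == "SET":
            self.data[key] = rest[0]
        elif op == "DEL":
            self.data.pop(key, None)

    def _write(self, entry: list) -> None:
        self.log.write(json.dumps(entry) + "\n")
        self.log.flush()             # push Python's buffer to the OS
        os.fsync(self.log.fileno())  # force the OS to put it on disk
        self._apply(entry)

    def set(self, key: str, value: str) -> None:
        self._write(["SET", key, value])

    def delete(self, key: str) -> None:
        self._write(["DEL", key])

    def close(self) -> None:
        self.log.close()


path = os.path.join(tempfile.mkdtemp(), "appendonly.log")
store = AofStore(path)
store.set("row:1", "pending")
store.set("row:1", "done")
store.set("row:2", "pending")
store.delete("row:2")
store.close()

restarted = AofStore(path)  # simulates a restart: state rebuilt from the log
print(restarted.data)       # {'row:1': 'done'}
restarted.close()
```

Syncing on every write is what makes this variant safe and also what makes it slow; batching syncs once per second trades that safety for speed.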

Consistency ✅

Redis supports two configurable consistency modes:

Weak consistency (the default): the primary shard acknowledges the write to the application (or CLI) as soon as it has applied it, before the replicas have applied it.

Faster reply

Guarantees eventual consistency

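Strong consistency: the acknowledgment is sent back to the application (or CLI) only after all replicas have acknowledged the write to the primary shard. In open-source Redis, a client asks for this with the WAIT command; the Redis documentation cautions that WAIT alone does not make Redis strongly consistent in every failover scenario.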

Slower reply

Guarantees consistency
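The difference between the two modes comes down to when the primary replies. The sketch below is a hypothetical in-process model (`Primary`, `Replica` and `wait_for_replicas` are made-up names, not Redis commands): in the weak mode a replica can still serve the old value, and in the strong mode the reply is the number of acknowledging replicas, similar in spirit to the integer Redis' WAIT command returns.

```python
class Replica:
    """Toy replica: receives the primary's write stream but applies it later."""

    def __init__(self) -> None:
        self.data: dict = {}
        self.backlog: list = []  # writes received but not yet applied

    def receive(self, key: str, value: str) -> None:
        self.backlog.append((key, value))

    def catch_up(self) -> None:
        for key, value in self.backlog:
            self.data[key] = value
        self.backlog.clear()


class Primary:
    """Toy primary shard (hypothetical, not the Redis API)."""

    def __init__(self, replicas: list) -> None:
        self.data: dict = {}
        self.replicas = replicas

    def set(self, key: str, value: str, wait_for_replicas: bool = False) -> int:
        """Returns how many replicas had applied the write when we acknowledged."""
        self.data[key] = value
        for replica in self.replicas:
            replica.receive(key, value)  # replication stream is asynchronous
        if not wait_for_replicas:
            return 0  # weak: acknowledge now, replicas catch up eventually
        for replica in self.replicas:
            replica.catch_up()  # strong: reply only once every replica has the write
        return len(self.replicas)


replicas = [Replica(), Replica()]
primary = Primary(replicas)

acks = primary.set("price", "10")                          # weak
print(acks, replicas[0].data.get("price"))                 # 0 None  (stale read)
acks = primary.set("price", "11", wait_for_replicas=True)  # strong
print(acks, replicas[0].data.get("price"))                 # 2 11
```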

Availability ✅

Redis supports clustering (a primary with read replicas).

Redis supports sharding
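Redis Cluster decides which shard owns a key by hashing it into one of 16384 hash slots (CRC16 of the key, modulo 16384); each shard owns a range of slots. Here is a Python sketch of that mapping, following the algorithm described in the Redis Cluster specification, including hash tags ({...}) that force related keys onto the same slot. The key names are only examples.

```python
def crc16(data: bytes) -> int:
    """CRC16-CCITT (XMODEM variant), the checksum Redis Cluster uses for keys."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_hash_slot(key: str) -> int:
    """Maps a key to one of the 16384 hash slots.

    If the key contains a non-empty {hash tag}, only the tag is hashed, so
    related keys can be forced onto the same shard."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end > start + 1:  # ignore an empty tag such as "{}"
            raw = raw[start + 1:end]
    return crc16(raw) % 16384


print(crc16(b"123456789") == 0x31C3)  # True: test vector from the cluster spec
print(key_hash_slot("{table:X}:row:1") == key_hash_slot("{table:X}:row:2"))  # True
```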

Concurrency Control ✅

Redis executes all clients' commands on a single thread (the only real concurrency is background work such as writing snapshots), so how does it achieve concurrency control between clients?

Optimistic locking: achieved with the WATCH command. You WATCH a key, and if another client changes its value before you commit, the commit (EXEC) fails, which means you need to retry.

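Starting from Redis 6, Redis can use multiple threads for network I/O (reading requests and writing replies), but commands are still executed on a single main thread, so the serial execution model above still holds.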
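Optimistic locking with WATCH can be modelled with per-key version numbers. This is a hypothetical sketch (`VersionedStore`, `exec_if_unchanged` and `add_to_balance` are illustrative names, not Redis or redis-py APIs): a commit only succeeds if the watched key's version is unchanged, and the caller retries otherwise.

```python
import threading


class VersionedStore:
    """Toy store modelling WATCH/MULTI/EXEC (hypothetical, not the Redis API).

    Every write bumps the key's version; a transaction that watched a key
    only commits if that version is unchanged at EXEC time."""

    def __init__(self) -> None:
        self.data: dict = {}
        self.versions: dict = {}
        self._lock = threading.Lock()  # stands in for Redis' single command thread

    def get(self, key: str):
        return self.data.get(key)

    def watch(self, key: str) -> int:
        return self.versions.get(key, 0)

    def set(self, key: str, value) -> None:
        with self._lock:
            self.data[key] = value
            self.versions[key] = self.versions.get(key, 0) + 1

    def exec_if_unchanged(self, key: str, watched_version: int, value) -> bool:
        with self._lock:
            if self.versions.get(key, 0) != watched_version:
                return False  # someone changed the key: the commit is rejected
            self.data[key] = value
            self.versions[key] = watched_version + 1
            return True


def add_to_balance(store: VersionedStore, key: str, amount: int, max_retries: int = 10) -> int:
    """Read-modify-write with retries; returns the attempt that succeeded."""
    for attempt in range(1, max_retries + 1):
        version = store.watch(key)                   # like WATCH key
        new_value = (store.get(key) or 0) + amount   # compute client-side
        if store.exec_if_unchanged(key, version, new_value):  # like MULTI ... EXEC
            return attempt
    raise RuntimeError("too much contention, giving up")


store = VersionedStore()
store.set("balance", 100)

version = store.watch("balance")
store.set("balance", 150)  # another client modifies the watched key
print(store.exec_if_unchanged("balance", version, 110))  # False: commit rejected

print(add_to_balance(store, "balance", 10))  # 1: succeeded on the first attempt
print(store.get("balance"))                  # 160
```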

Redis as a Primary Database - Success Story

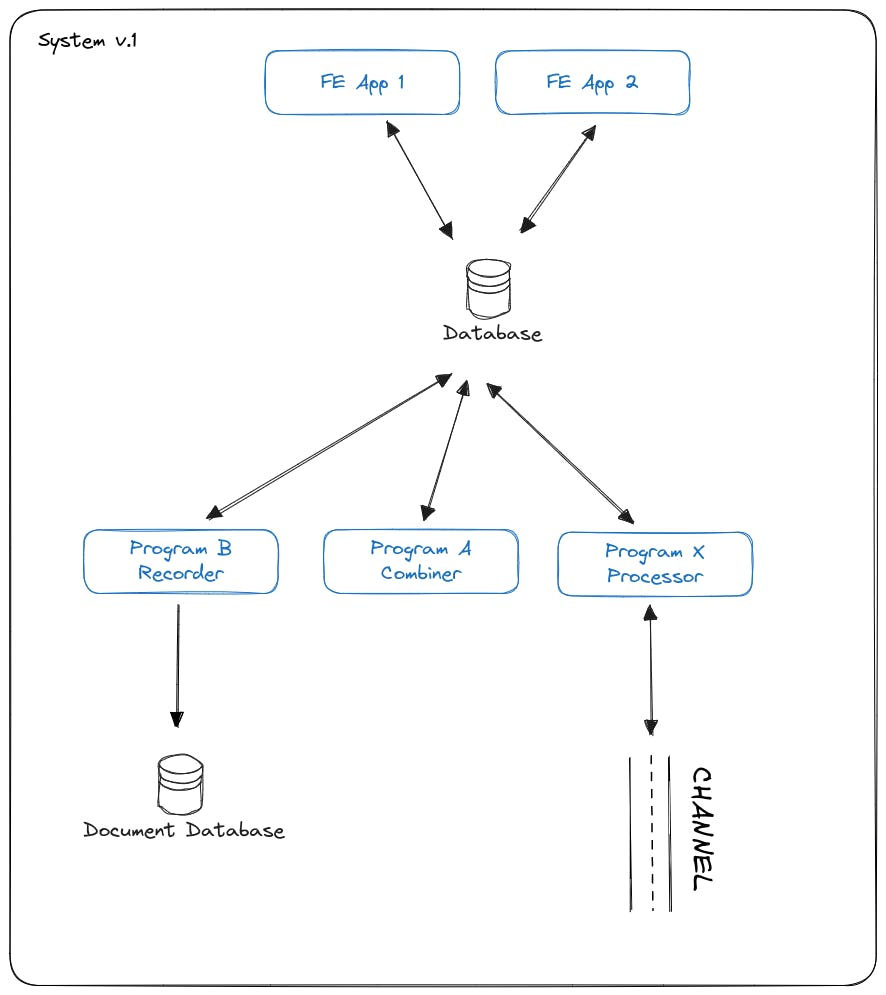

Once upon a time, there was a simple system that worked fine (we'll simplify it to only the parts we're interested in).

The system components

Program X - Processor

- Reads and writes hundreds of rows each second into tables A & B

Program A - Combiner

Each second

Reads all rows from tables A & B

Processes both

Writes the result into table X

Program B - Recorder

Each second

Takes a snapshot of table X

Writes it as a document to the document database

Front-end App (Desktop application)

Each second

Polls the database to get all the rows to update the rendering

Reads from one, several, or all of the tables (A, B, X)

Relational Database (MySQL)

- Acts as a communication channel between all programs.

NoSQL Database (MongoDB)

- For recording the system state

The system was critical and had to keep delays to a minimum: from the moment a message arrived on the channel until the FE updated its rendering, the whole process could not take more than 1 second for any update or new message. Messages coming through a channel also had to be processed in the order they arrived.

We used MySQL as the primary database and MongoDB as the recording database.

And it worked fine at this scale: the messages coming from the CHANNEL were limited, there was only one channel and one Program X, table X held a maximum of 1000 rows, and updates for these rows arrived each second through the CHANNEL.

Clearly the system had many flaws, but it worked, for a long time actually! And believe me, rebuilding it just because it was ugly wasn't worth the cost. Until it was!

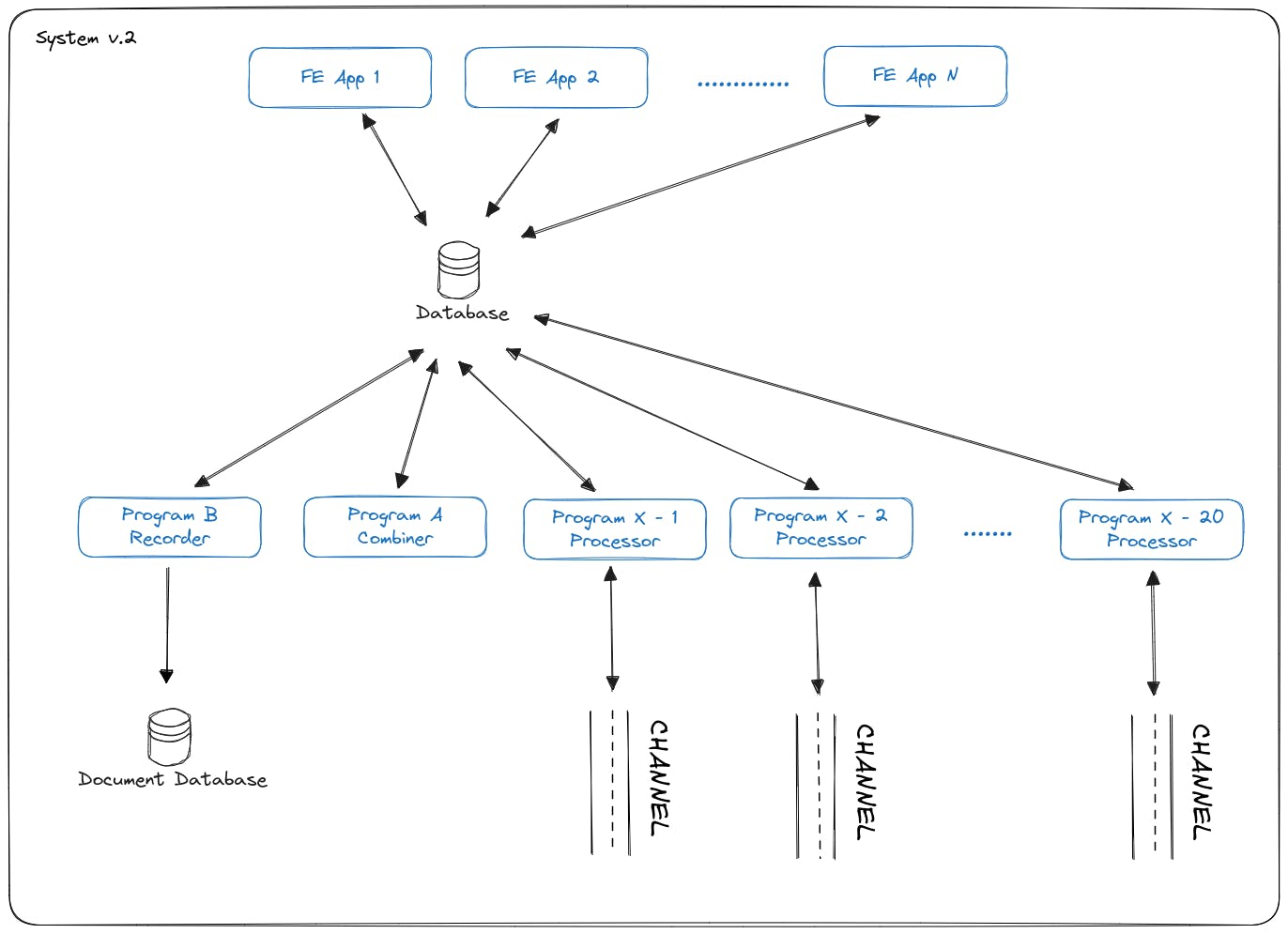

New Requirements!

Multiple channels, up to 20

Multiple FE apps (should not be limited to any number)

Program A must now read, process, and combine the outputs of all 20 Program X instances

Maximum total rows in table X is 2000

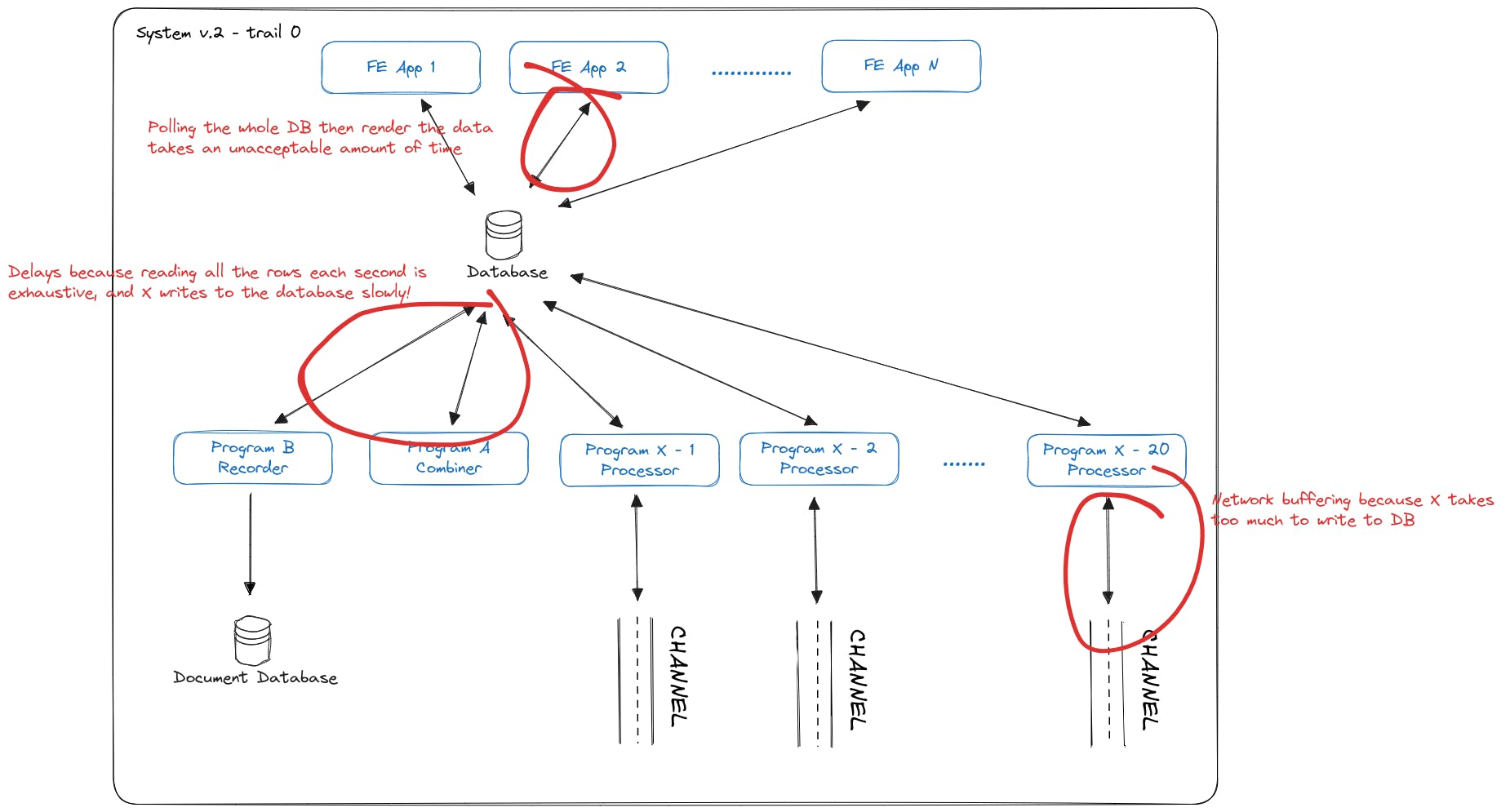

We knew this wouldn't work, but we tried anyway... and, as expected, we had MASSIVE DELAYS!

So we finally decided to have the talk!

The talk that we should've had from the beginning

The Talk

What are the issues in this design on that scale?

Network buffering: Program X spends so much time writing to a relational database and waiting for each transaction to complete before processing the next message that incoming messages pile up.

Database polling: every FE app polls the database each second, so with an unlimited number of FE apps the load explodes and rendering eventually slows down.

The Combiner program takes much longer to process all these Program X inputs, and thus: more DELAYS!

From here, we knew we needed to solve the polling issue with a publish/subscribe strategy, because the FE apps only need the new updates.

We also needed to write to and read from the database much faster than before. With a maximum of 2000 entries, we could keep the data in memory! (Of course, we'd need some SSDs to extend the RAM, because there are many tables.) We decided we needed caching.

The next chapter was full of failed ideas. We'll describe the following architectures in terms of system.v1, just for simplicity.

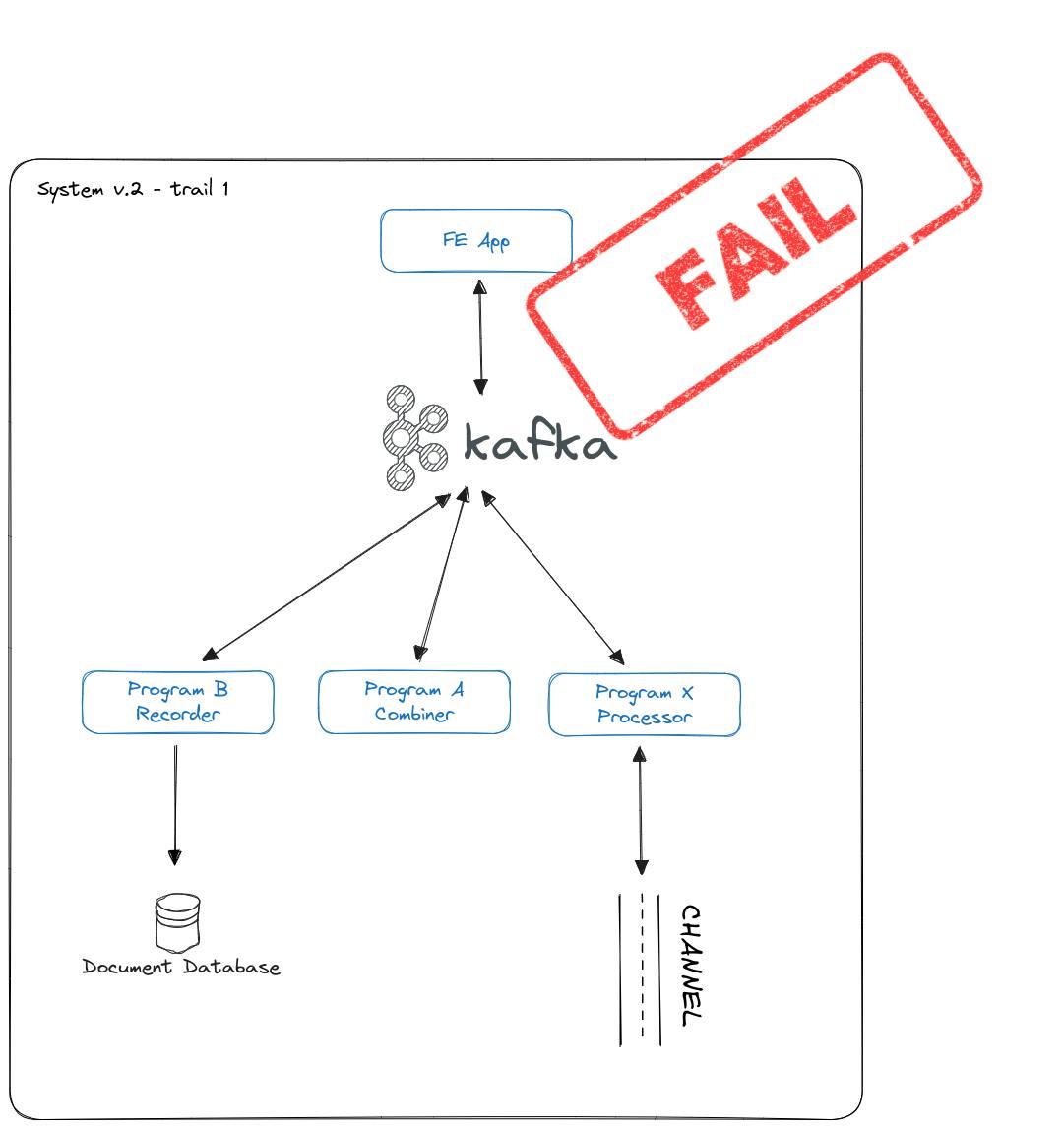

Trial 1

The network buffering issue would be solved.

But the Combiner program's issue (it needs to read and write so much, so quickly) still isn't solved.

That's because the Combiner program needed a practical way to read previous data, and Kafka was really complex for this use.

Trial 2

The network buffering issue would be solved, and the FE polling issue too! ✅

But the Combiner program's issue (it needs to read and write so much, so quickly) still isn't solved.

And consider how complex Kafka is, especially when using it from an existing C++ code base.

After lots of discussions and different designs, including:

Using a simpler queue service with a document database for persistence

Using a simpler queue service, a caching service, and a document or relational database for persistence

What if we replaced the relational database with a document database?

Do we need the relational database features? Can we let go of them, or do we rely on them? The answer was: we don't need them!

We decided to use Redis as a primary Database!

The network buffering issue got solved, because we're writing to memory, not to disk! ✅

The FE polling issue got solved: now as many FE apps as we need can subscribe and get the updates ✅

The Combiner program can now read and write very fast ✅

The Recorder program can easily take a snapshot and copy it document to document, from Redis to MongoDB! ✅

The data is still persistent, thanks to the Redis snapshot feature! ✅

AND WE GOT OUR HAPPY ENDING 🎉

Redis features beyond caching and towards everything!

Redis Search

A query and indexing engine for Redis, providing secondary indexing, full-text search, and aggregations.

Redis Pub/Sub

Messaging technology that facilitates communication between different components in a distributed system. This communication model differs from traditional point-to-point messaging, in which one application sends a message directly to another. Instead, it is an asynchronous and scalable messaging service that separates the services responsible for producing messages from those responsible for processing them.
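A minimal sketch of the publish/subscribe model, assuming an in-process `Broker` class (hypothetical, not the Redis API). Like Redis Pub/Sub, it is fire-and-forget: subscribers get only messages published after they subscribe, and nothing is stored for late joiners, which is why it suits FE apps that only need new updates instead of polling.

```python
from collections import defaultdict
from typing import Callable


class Broker:
    """Toy publish/subscribe broker (hypothetical, not the Redis API).

    A message is pushed to whoever is subscribed at publish time and is not
    stored for later subscribers."""

    def __init__(self) -> None:
        self.subscribers: dict = defaultdict(list)

    def subscribe(self, channel: str, callback: Callable[[str], None]) -> None:
        self.subscribers[channel].append(callback)

    def publish(self, channel: str, message: str) -> int:
        for callback in self.subscribers[channel]:
            callback(message)
        return len(self.subscribers[channel])  # like PUBLISH: number of receivers


broker = Broker()
fe_app_1: list = []
fe_app_2: list = []

broker.subscribe("table:X:updates", fe_app_1.append)
print(broker.publish("table:X:updates", "row 7 changed"))  # 1
broker.subscribe("table:X:updates", fe_app_2.append)       # joins late
print(broker.publish("table:X:updates", "row 9 changed"))  # 2
print(fe_app_1, fe_app_2)  # ['row 7 changed', 'row 9 changed'] ['row 9 changed']
```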

Redis Graph

A graph database as a Redis module.

Redis Time Series

Time series data structure for Redis.

Redis AI

A Redis module for serving tensors and executing deep learning graphs

And many more! Thanks to Redis modules.

Redis Modules

Redis modules make it possible to extend Redis functionality using external modules, rapidly implementing new Redis commands with features similar to what can be done inside the core itself.

Redis modules are dynamic libraries that can be loaded into Redis at startup, or using the MODULE LOAD command.

And since Redis is open source, and developers love open source, they keep releasing interesting new modules.

Why use Redis as a primary database?

Okay, Redis is great and fast, but is it worth it?

Let's say we have a complex social media application with millions of users. For this, we may need to store different data formats in different databases:

Relational database, like MySQL, to store our data

Elasticsearch for fast search and filtering

Graph database to represent the connections of the users

Document database, like MongoDB, to store media content shared by our users daily

Cache service for better application performance

It's obvious that this is a pretty complex setup.

Using Redis resolves this complexity by replacing each of the above components with either a built-in Redis feature or a Redis module.

You run and maintain just one data service, so your application talks to a single data store through a single programmatic interface.

In addition, latency is reduced by going to a single data endpoint and eliminating several internal network hops.

Cost Concerns

Why, in most cases, should you not use Redis as a primary database? Well, it's expensive!

After all, you need to think carefully before migrating: there is always a downside, and with Redis it's the cost of RAM, and of course the cost of the migration itself!